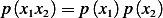

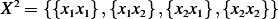

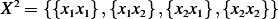

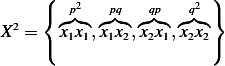

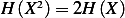

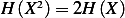

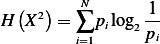

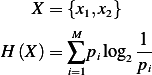

Consider a source which generates 2 symbols $x_1, x_2$ with probability $P(x_1) = p_1$ and $P(x_2) = p_2$.

Now consider a sequence of 2 outputs as a single symbol, $X^2 = \{x_1x_1, x_1x_2, x_2x_1, x_2x_2\}$.

Assuming that consecutive outputs from the source are statistically independent, show directly that $H(X^2) = 2H(X)$.

Solution

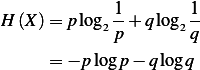

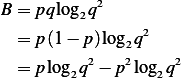

First find $H(X)$:

$$H(X) = -\sum_{i=1}^{N} p_i \log_2 p_i$$

where $p_i = P(x_i)$ and $N$ in this case is 2. The above becomes

$$H(X) = -p_1 \log_2 p_1 - p_2 \log_2 p_2$$

Hence $2H(X)$ becomes

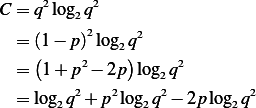

Now we consider $X^2$. Below we write $X^2$ with the probability of each 2 outputs as a single symbol on top of each. Notice that since the symbols are statistically independent, $P(x_i x_j) = P(x_i) P(x_j)$, so with $p_i = P(x_i)$ we obtain

$$X^2 = \left\{ \overset{p_1^2}{x_1 x_1},\ \overset{p_1 p_2}{x_1 x_2},\ \overset{p_2 p_1}{x_2 x_1},\ \overset{p_2^2}{x_2 x_2} \right\}$$

Hence, since

$$H(X^2) = -\sum_{i=1}^{N} P_i \log_2 P_i$$

where $P_i$ is the probability of the $i$-th two-output symbol and $N = 4$ now, the above becomes

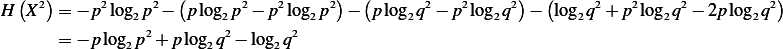

Now we expand the labeled terms above and simplify; we will then obtain (1), showing the desired result.

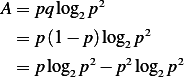

Substituting the result found for the labeled terms back into (2), we obtain

But the above can be written as

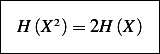

Comparing (4) and (1), we see they are the same.

Hence

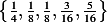
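The identity can also be checked numerically. The following is a minimal sketch, not part of the original solution: the helper names `entropy` and `pair_distribution` and the test values of `p` are assumptions chosen for illustration, and logarithms are taken base 2.

```python
import math
from itertools import product


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def pair_distribution(probs):
    """Probabilities of all ordered pairs of two independent outputs."""
    return [a * b for a, b in product(probs, repeat=2)]


for p in (0.1, 0.25, 0.5, 0.9):  # illustrative values only
    single = [p, 1 - p]
    pairs = pair_distribution(single)
    # The four pair probabilities must still form a distribution.
    assert math.isclose(sum(pairs), 1.0)
    # Entropy of the two-output symbol equals twice the single-output entropy.
    assert math.isclose(entropy(pairs), 2 * entropy(single))
```

The check relies on independence only through `pair_distribution`, which multiplies the individual probabilities; a source with dependent outputs would need its joint probabilities supplied directly.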

A source emits a sequence of independent symbols from an alphabet $X$ of symbols $x_i$ with probabilities $P(x_i)$. Find the entropy of the source alphabet.

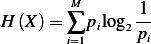

Solution

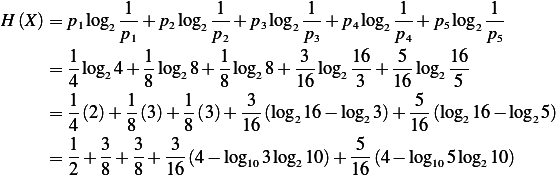

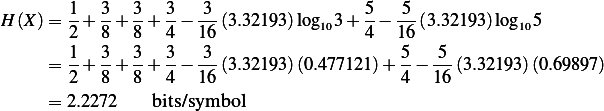

Where  , hence the above becomes

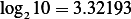

But  , hence the above becomes

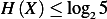

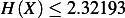

To verify, we know that $H(X)$ must be less than or equal to $\log_2 N$, where $N$ is the number of symbols in the alphabet in this case; therefore, our result above agrees with this upper limit restriction.
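The same upper-limit check can be sketched in code. The probabilities below are hypothetical placeholders, not the values from the problem, and `entropy` is an illustrative helper rather than anything defined in the original solution.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical distribution, NOT the one from the problem statement.
probs = [0.5, 0.25, 0.125, 0.125]

h = entropy(probs)
upper = math.log2(len(probs))  # maximum entropy, reached by a uniform source

assert h <= upper
print(f"H(X) = {h:.4f} bits, upper limit log2(N) = {upper:.4f} bits")
```

For this placeholder distribution the entropy is 1.75 bits against a limit of 2 bits; equality holds only when all $N$ symbols are equally likely.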